Nothing this time of year draws more hype relative to student interest than the release of global university rankings. Admittedly, students enjoy seeing the results, but their attention rarely lingers beyond cheeky messages to a friend at UCD. In the most recent release, the Times Higher Education (THE) rankings dropped Trinity nine places to 138th, which should leave many wondering what that number actually means.

The short answer is nothing, on its own. Absent any context, that number is like a sticker price for an object you’ve never seen or heard of before. The tag might say 10 quid, but is that a bargain, or was it made for pennies?

The proper context for university rankings is their methodology. I’m going to focus on THE rankings here, because they are the most recent and the most unfavourable to Trinity, but the logic is often similar in other tables. For THE, the five major categories are International Outlook, Research, Citations, Industry Income, and Teaching. Looking closely at these scores tells the story of why a university’s ranking changed, and more importantly whether its students should care.

Trinity scores very highly on International Outlook (which must’ve put a spring in Paddy Prendergast’s step). This number uses domestic-to-international ratios for students and staff, on the assumption that more diversity means taking the best of both groups from around the world. I hesitate to make any specific claims to the contrary, but that assumption would seem to require further investigation. At the very least, it is easy to argue that diversity is intrinsically valuable to students by expanding their cultural exposure.

Next is Research, as in the volume and reputation thereof, as well as the income it generates. Here Trinity suffers primarily from its ties to the Irish government, whose recent cuts to the education budget have been both necessary and painful. Undergraduate students, though, should notice that declining research output and income are really only meaningful to those pursuing advanced degrees, especially since they are not the result of a decline in underlying educational value. This is the first of the three scores that are useful almost exclusively to academics, and that therefore undermine the credibility of a single overall ranking.

Trinity’s relative success in Citations points to the sustained quality of its staff. Even with shrinking budgets, the university’s published work is cited by other scholars at an extraordinary rate. That Trinity scores so well in the highest weighted criterion says plenty about overblown fears of the university’s decline. Again, however, this metric is really only valuable within the academic sphere, unless you want to argue that academic citations correlate with quality of undergraduate education. Students would likely counter that a brilliant researcher often lacks the charisma or ingenuity to thrive in the classroom.

Industry Income is a lightly weighted and somewhat unimportant score, in which Trinity still manages to do poorly. Essentially this rates how the commercial marketplace values a university’s research and innovations, in monetary terms. This is the third score that assumes a positive relationship between postgraduate research and overall educational value. By now this assumption should be at least questionable enough for us to wonder why it underpins three-fifths of the overall ranking.

The final category is Teaching. I’ve saved it for last because it is the most relevant score for the greatest number of students, but also the trickiest to quantify. The score comprises a number of correlative measures, like ratios of students-to-staff and doctoral-to-bachelor’s degrees awarded. But the most highly weighted factor is the result of an academic reputation survey given to a select group of academics.

Notably, the survey completely ignores a school’s reputation among companies that might actually hire its graduates. That’s how THE’s reputation rankings place Pennsylvania State University at 39th (go Nittany Lions!), while Ivy League bastion Brown University checks in at 81st. I’m betting even non-Americans can guess which of those schools produces higher-earning graduates.

If you’ve gotten somewhat lost in the numbers by now, you’re starting to see what little relevance these metrics hold in the day-to-day experience of students. They overly emphasize things that are important for students looking to remain in academia, which is to say not many of them. This wouldn’t be too problematic if not for the fact that all of the College’s recent decisions seem aimed at driving up the ranking, regardless of its tenuous connection to improving student life or job prospects.

Meanwhile, students can’t find accommodation because Trinity has deemed building new dorms unimportant, and the Sports Centre has to curb overcrowding by further squeezing those who don’t even use it. These issues, and so many more, are not captured by meaningless rankings, or even the complex metrics that determine them. Instead they are felt only by the students who will one day have to explain to a potential employer why their school isn’t ranked as highly as the last guy’s.

University rankings are valuable, but only if you are interested in the exact things that they measure. For most students, comparing graduate earnings or employment rates would be a far more helpful way to evaluate schools. And that’s assuming you can objectively rank different educations in the first place. The media and society far too often extrapolate a single ranking to make a point that the numbers do not support. Understanding the data behind the headline is the first step in determining its value, or lack thereof.

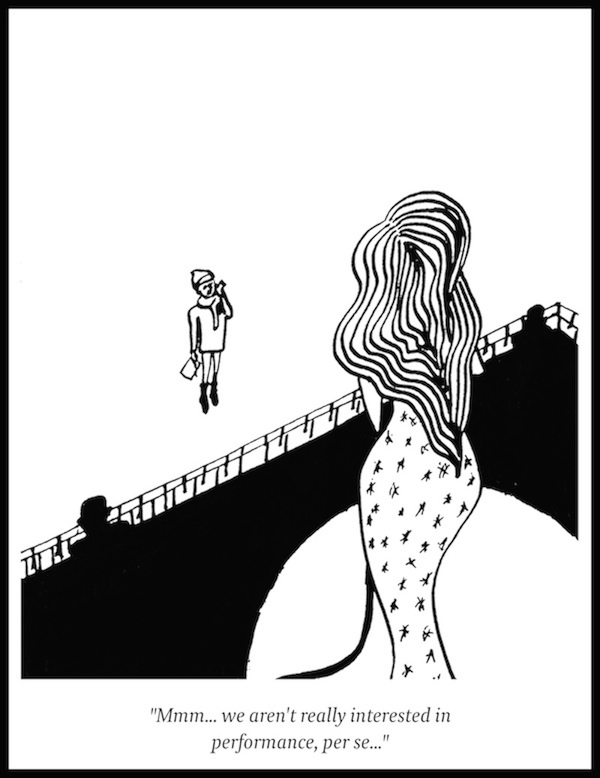

Illustration by Ella Rowe for the University Times